If the relationships among artificial intelligence, machine learning, and deep learning were one thing that confuses me the most at the beginning of my data science journey, countless terms in computer vision are not helping with the rest of the journey.

However, there is no way for me to avoid them since drone data are mainly images. Trying to make the computer process and analyse those images like humans is essentially computer vision.

Therefore, I would like to share my understanding of some of the most confusing terminologies I encountered previously.

If I could go back in time and tell the new-to-computer-vision version of me, I will categorise the terms into two groups: result-related and method-related

Result-related terms for Computer Vision (tutorial)

The history of computer vision is inseparable from artificial intelligence. What people are trying to achieve are different levels of human understanding of images. Therefore, there is a lot of vocabulary used to precisely describe each level. (Trust me, this might also be a great way to improve your English if you’re a non-native speaker like me ;))

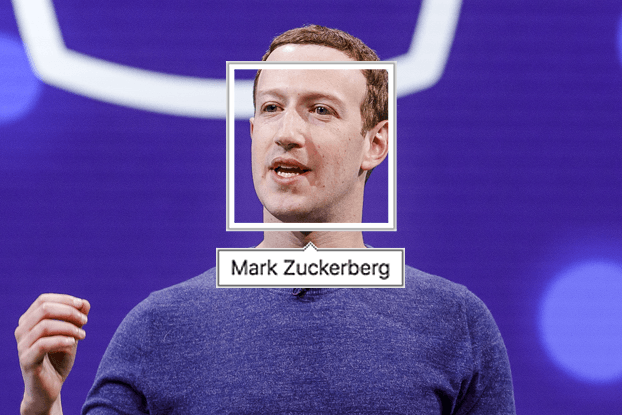

I’d like to use the development of Facebook photo tagging as an example to explain the first three concepts.

Do you remember how exciting it was that you can tag your friends in a photo? In the beginning, all you can do is click your mouse on your friend’s face and say who this is.

1. Detection

Then, it got a little smarter. Before you click, it starts to draw little boxes around faces, or face-like structures. This is detection.

Image detection or object detection is probably the two of the most common phrases once you want to learn something about computer vision. This is pretty straightforward: it detects a type of object. In other words, the computer will go through the image, locate the object and then say yes or no if it’s the type of object we are looking for.

2. Classification

After a while, Facebook published Facebook Page Shop. Now you can tag products as well. To tell differences between products and the good old human faces. It becomes a question of classification.

Classification, as many people said on the internet, is a more advanced version of detection. This is because now the computer is facing multiple types of objects or so-called “classes”. When it finishes detection, it needs to sort different objects into categories. Thus taking a little bit more effort.

3. Recognition

Previously you still need to tell Facebook who this person is or what that product was. Now, it gets so much smarter. Once you’ve uploaded an image with your friends, Facebook will automatically circle the familiar faces and then suggest a name right away. This is recognition.

Recognition, as in recognising individuals, is trying to distinguish each unique instance within the same category. It is the most complicated process among all three. Our brains have been trained to recognise faces since we were born with new input data every day and we still mistake some people for others once in a while. You can imagine how difficult it can be for a machine.

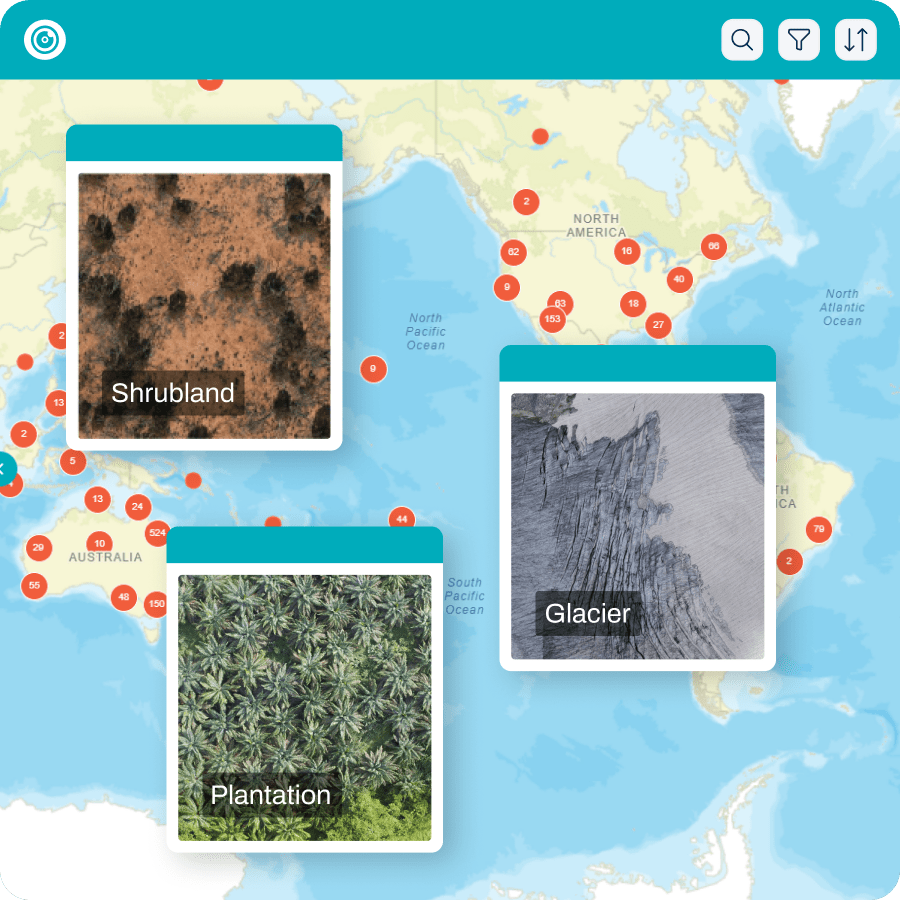

Method-related terms

Now if we take a step back, there are also terms related to the tagging process in computer vision. The tagged photo and notifications are the results we get. But let’s not forget that what enables social media to do so is because we are helping them to build up enormous training data by clicking and typing names around the faces.

Tagging, or more often known as “labelling”, is the process that using text to describe images and make them more understandable for the computer so that people can train machine learning models based on these labels.

Again, the tech terms do not make it easy for beginners. Let’s look at another four commonly used words and phrases that are related to the tagging method.

Consider this image below (the two kittens that I fostered who can never get enough of each other), there are four different ways of labelling them.

4. Classification

Yes, we come across the exact same word again. Although it’s a relatively more complicated process when analysing the image, in the labelling process, it’s the simplest term. It’s a simple “yes” or “no” for each image you are going to label.

In this case, yes there are cats in this picture.

5. Bounding boxes

The bounding box is the next step by locating the object in the images. As you can see from the image below. The kittens are circled out by the green boxes.

6. Semantic Segmentation

Sometimes, people are not satisfied with the rough location of a box. There are still a lot of pixels within the boxes that are not part of the cat. Therefore, semantic segmentation is a precise polygon that traces around the boundaries.

7. Instance segmentation

Yet semantic segmentation only tells you the categories. Instance segmentation can provide a pixel-wise mask on each individual instance, which make the label most precise.

Either it’s a result-related or a method-related term, we all have a similar dictionary when we are dealing with drone imagery. Hope today’s explanation with our Computer Vision Tutorial can help you to clarify a little bit more about this topic.