Move over ChatGPT, there’s a new AI kid on the block! Just this week MetaAI released a new tool called ‘Segment Anything’ (Meta is best known as Facebook’s parent company), and it has unleashed the same mix of fear and excitement as many tech tools before it. As someone who has been segmenting Earth observation imagery for more than two decades, I am firmly planted in the excited camp! This is one of the coolest image analysis tools I’ve seen in a long time. So can we use Segment Anything for drone imagery? Let’s check it out! First the eye candy, then scroll on for all the details.

What is segmentation?

Segmentation is something that the human brain does exceptionally well. Look around you. Can you see the edges that tell you where one object finishes and another one starts? Perhaps a teacup on the table, or shoes on the floor? Thankfully we do this really easily; otherwise daily life would be exhausting!

But for a computer looking at an image of that same table or floor, detecting the different objects is not so simple. Edges get blurry and ill-defined. And is the title on the cover of a book a different object from the book itself? Of course the human brain would say no, but the computer isn’t necessarily so smart.

Segmentation algorithms either cut images up into regular shapes (e.g. a grid), or they try to imitate human vision by detecting objects and their edges. In simple terms, the object-based kind find objects by looking for areas of high contrast between adjacent pixels.
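To make the ‘high contrast between adjacent pixels’ idea a little more concrete, here’s a minimal Python sketch on a tiny, made-up greyscale image. The function name `edge_map` and the threshold of 50 are my own illustrative assumptions, and real tools (Segment Anything included) are far more sophisticated than a simple neighbour comparison.

```python
def edge_map(image, threshold):
    """Flag a pixel as an 'edge' if it differs from any of its four direct
    neighbours (up, down, left, right) by more than `threshold`."""
    rows, cols = len(image), len(image[0])
    edges = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                # Only compare with neighbours that lie inside the image.
                if 0 <= nr < rows and 0 <= nc < cols:
                    if abs(image[r][c] - image[nr][nc]) > threshold:
                        edges[r][c] = True
    return edges


# A toy 5 x 5 greyscale image: a bright 3 x 3 "object" on a dark background.
image = [
    [20, 20, 20, 20, 20],
    [20, 200, 200, 200, 20],
    [20, 200, 200, 200, 20],
    [20, 200, 200, 200, 20],
    [20, 20, 20, 20, 20],
]
edges = edge_map(image, threshold=50)
for row in edges:
    print("".join("#" if e else "." for e in row))
```

The printout shows a ring of `#` on both sides of the object’s boundary, while the object’s interior and the far corners of the background stay as `.`, because nothing around them changes much.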

If you’ve ever used a virtual background in Zoom or Teams, you’ve been segmented! The algorithm looks for our edges so that it can remove everything that’s not us and replace it with a different background. That’s why green screens are so effective: they create strong, uniform contrast between us and what’s behind us. As long as we aren’t wearing bright green clothes, it’s really easy for computer vision to tell the people or other objects we want to see apart from the green background.

It’s this object-based segmentation from MetaAI in ‘Segment Anything’ that’s most exciting to me when working with drone imagery.

Why is segmentation interesting for Earth observation?

Often when we extract information from images, we do so by creating generalised categories (e.g. forest, water). We then classify components of the image into one of these categories. We can do this via ‘pixel-based’ or ‘object-based’ algorithms.

Pixel-based techniques assess each pixel on its ‘colour’ alone. For example, grass is green and the road is black. Object-based techniques, on the other hand, first group similarly coloured adjacent pixels together into an object or segment. We then use algorithms that look at colour, texture, shape, size, context, and shadow to identify that object. Because of this, object-based techniques are better at mimicking the way the human brain understands the environment. Not every green thing in drone imagery is grass!
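Here’s a minimal sketch of that first grouping step, assuming a single-band image (say, green values) stored as nested lists and a hypothetical colour `tolerance`. It uses plain region growing (flood fill), which is a swapped-in classical technique, not how eCognition or Segment Anything actually work under the hood.

```python
from collections import Counter, deque


def segment(image, tolerance):
    """Group 4-connected neighbouring pixels whose values differ by at most
    `tolerance` into segments. Returns a grid of integer segment IDs.
    Note: each pixel is compared with the neighbour it was reached from,
    so a slow, gradual gradient can still end up in one segment."""
    rows, cols = len(image), len(image[0])
    labels = [[-1] * cols for _ in range(rows)]  # -1 means "not yet assigned"
    next_id = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if labels[r0][c0] != -1:
                continue
            # Start a new segment here and flood outwards from this pixel.
            labels[r0][c0] = next_id
            queue = deque([(r0, c0)])
            while queue:
                r, c = queue.popleft()
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and labels[nr][nc] == -1
                            and abs(image[nr][nc] - image[r][c]) <= tolerance):
                        labels[nr][nc] = next_id
                        queue.append((nr, nc))
            next_id += 1
    return labels


# Two bright "green" patches on a dark background: pixel-based, they look alike.
image = [
    [10, 10, 90, 90],
    [10, 10, 90, 90],
    [10, 10, 10, 10],
    [95, 10, 10, 10],
]
labels = segment(image, tolerance=10)
sizes = Counter(seg_id for row in labels for seg_id in row)
print(labels)
print(dict(sizes))
```

Both bright patches would get the same answer from a pixel-by-pixel colour rule, but once they are grouped into objects we can see that one is a 4-pixel block and the other a single pixel, and that size (and shape) information is exactly what object-based methods then use.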

Why is MetaAI’s ‘Segment Anything’ tool so interesting for drone imagery?

I don’t believe that ‘Segment Anything’ does anything that hasn’t been done before. It just does it better and faster, with a super simple user interface.

I first started testing segmentation for aerial and satellite imagery using eCognition in 2000, though much later for drone imagery. At the time it was a unique (and exceptionally expensive) piece of software for Earth observation. The main players in remote sensing software have all added segmentation tools to their offerings over the years, but eCognition always seemed to stay a few steps ahead.

That doesn’t mean it’s easy to use, though. I attended a five-day course in 2009 to learn how to use it properly and still only scratched the surface. I dip back into it now and then, refer to my manuals, and wonder why there hasn’t been any significant innovation in this space for so long.

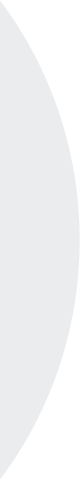

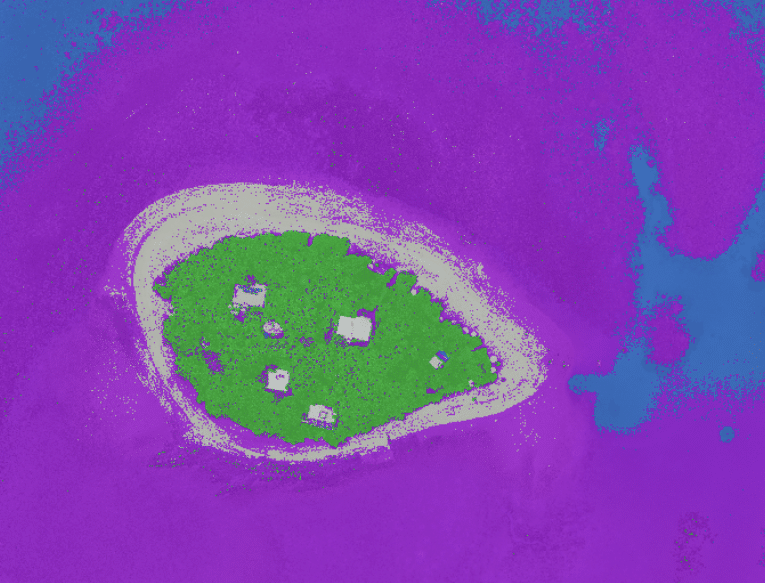

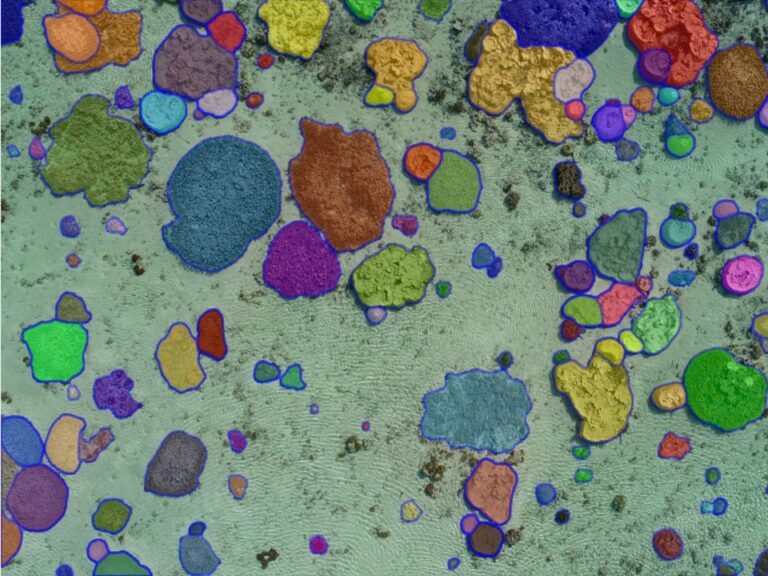

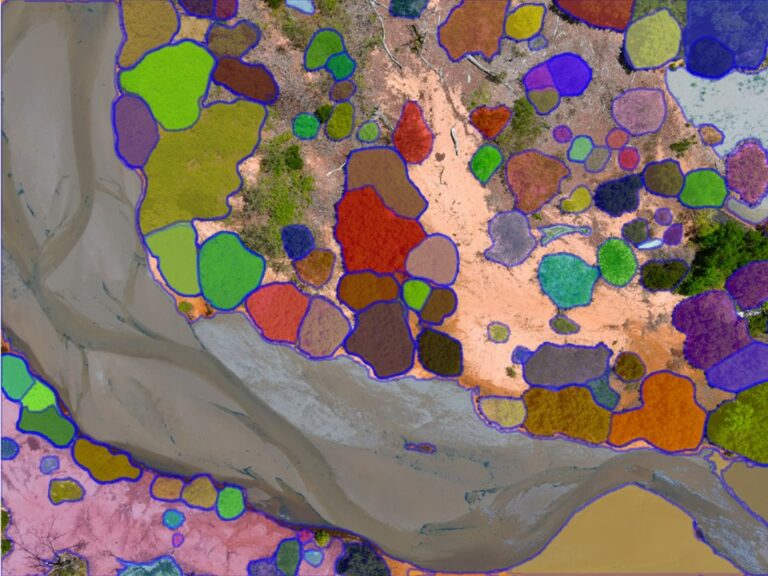

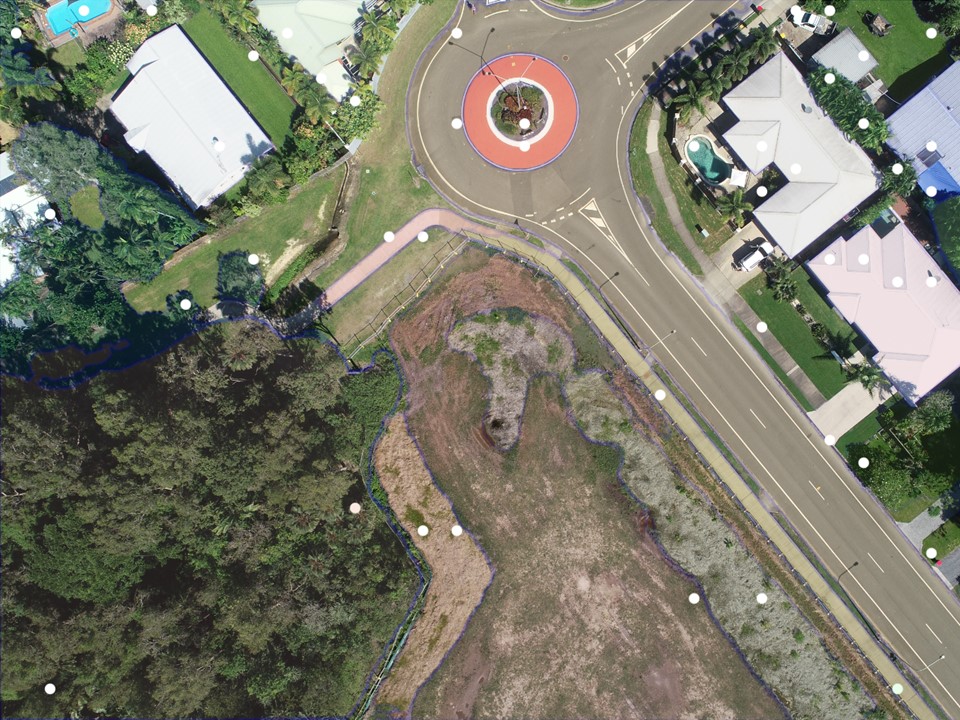

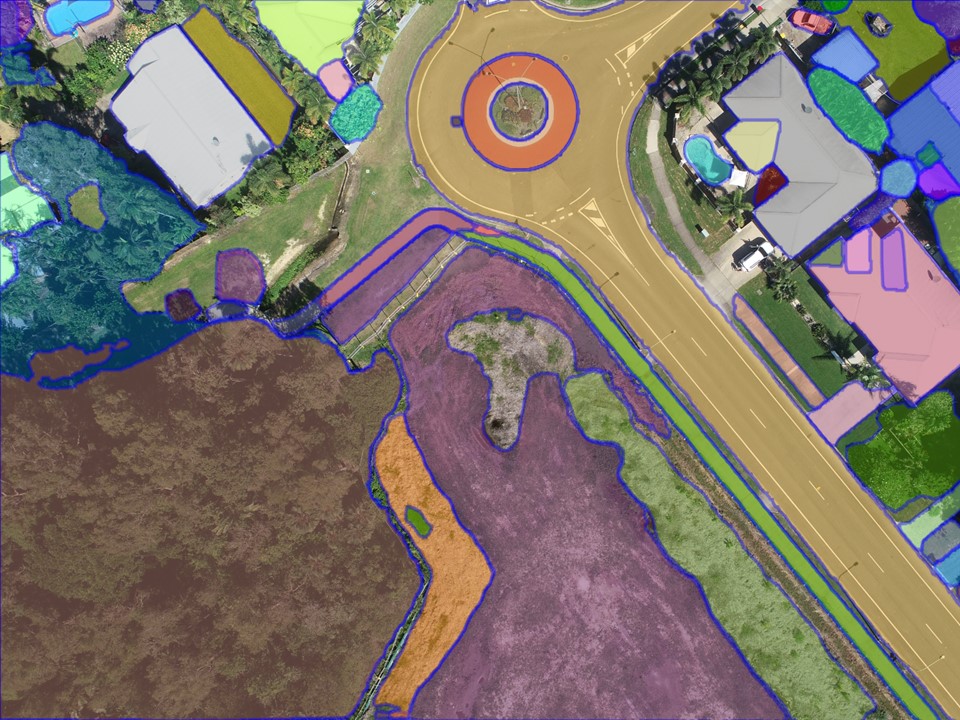

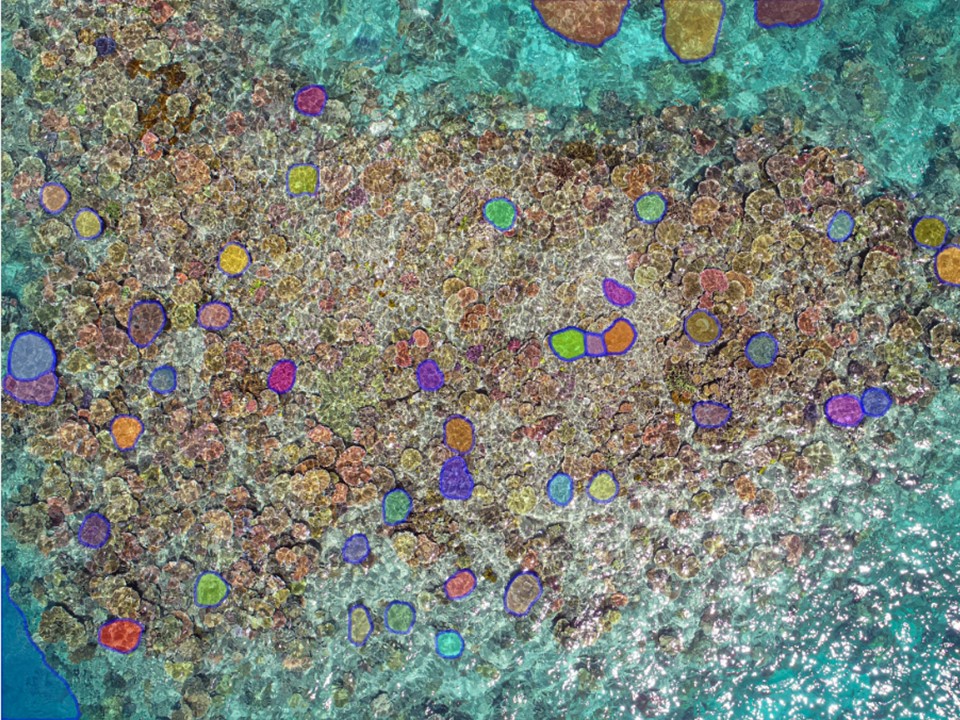

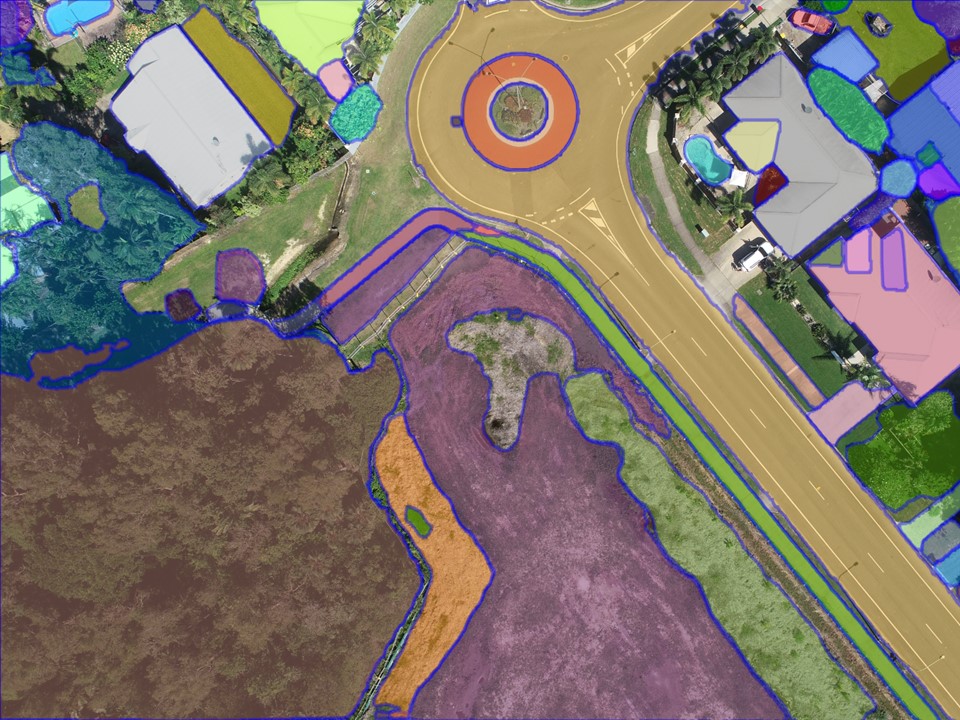

Until now. The simplicity of Segment Anything, and its results, are ridiculously cool. Check out the examples below with drone imagery to see for yourself. The first pair of images, over the Great Barrier Reef, was captured at 20 m altitude, while the second pair shows a coastal environment from 100 m.

Segmented image

Segmented image

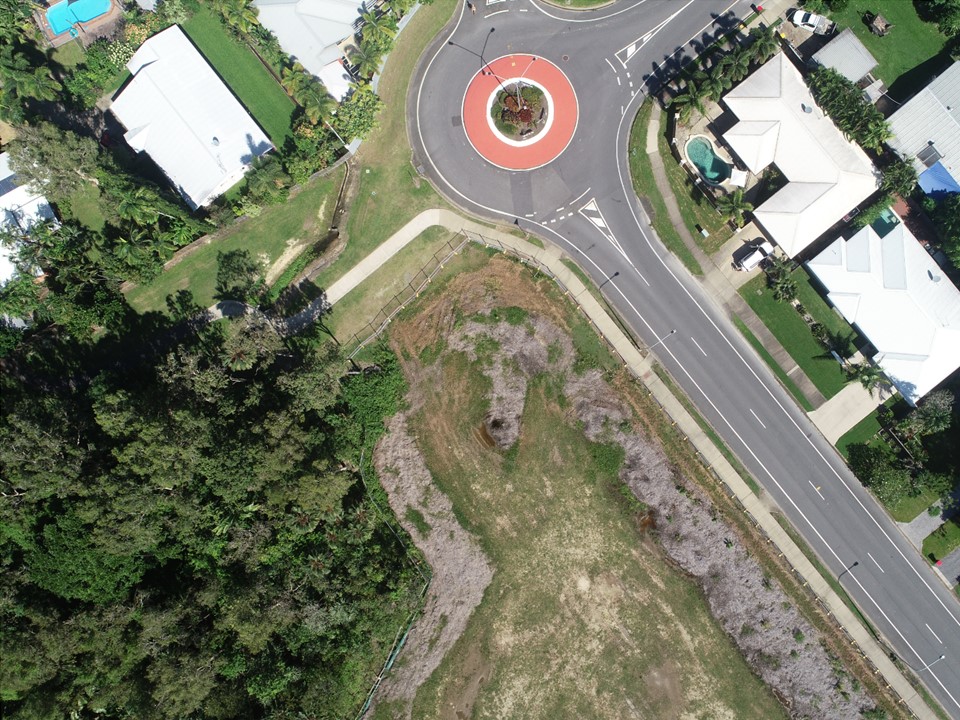

Original image

Original image

Segmented image

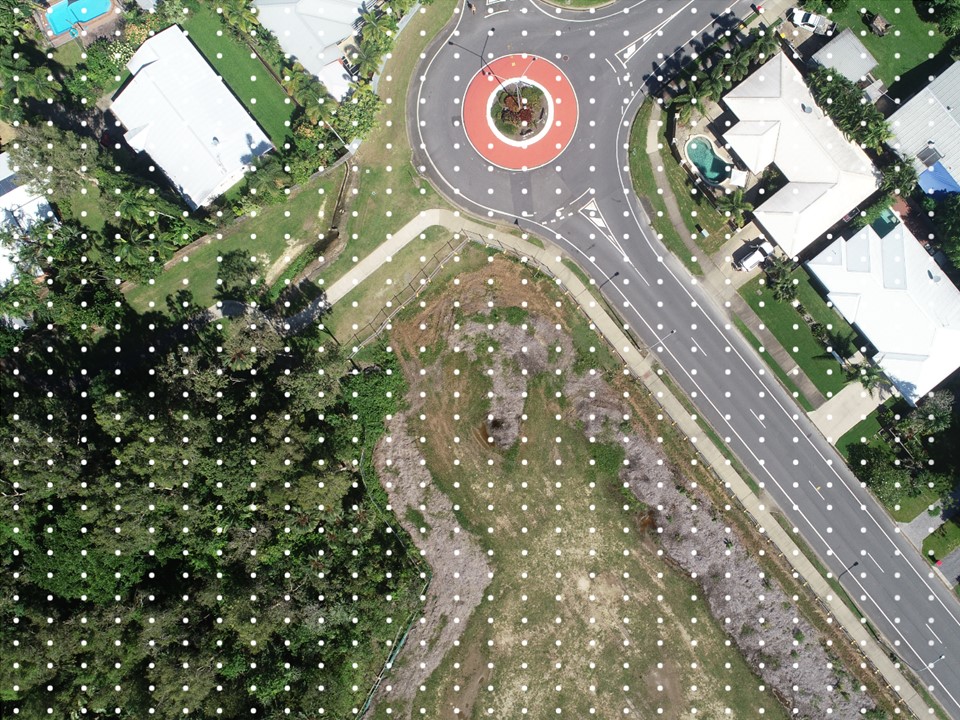

Segmented image

Original image

Original image

How does Segment Anything work?

In the most basic terms, the segmentation is conceptually a four-step process:

- The software ingests the image (drone imagery in this case).

- It overlays a grid of 32 x 32 points (1,024 in total), each acting as a prompt to look for a feature.

- It finds key points (serious smarts here) and removes the rest of the grid.

- It ‘grows’ out from its key points until it reaches a threshold of ‘change’ in pixels (more smarts). That threshold marks areas of increased contrast, i.e. the ‘edge’ of objects or segments, and the result is a set of polygons representing the segments.
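To make those four steps concrete, here’s a deliberately simplified Python sketch. It is an assumption-heavy stand-in, not Meta’s code: Segment Anything actually feeds each grid point to a neural network that predicts a mask, then filters out low-quality and duplicate masks, rather than growing regions pixel by pixel. Here, step 3 is approximated by skipping grid points that already fall inside a found segment, and step 4 by classical region growing against the seed pixel’s value. All function names and the threshold are hypothetical.

```python
from collections import deque


def point_grid(n_per_side, width, height):
    """Step 2: evenly spaced points at the centre of each grid cell,
    returned as (x, y) pixel coordinates."""
    points = []
    for j in range(n_per_side):
        for i in range(n_per_side):
            x = (i + 0.5) * width / n_per_side
            y = (j + 0.5) * height / n_per_side
            points.append((x, y))
    return points


def grow_from_seed(image, seed_rc, threshold):
    """Step 4 (stand-in): grow outwards from the seed until pixel values
    differ from the seed's value by more than `threshold`."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed_rc[0]][seed_rc[1]]
    region = {seed_rc}
    queue = deque([seed_rc])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= threshold):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


def segment_from_grid(image, n_per_side, threshold):
    """Steps 1-4 together; step 3 is approximated by discarding grid points
    that land inside a segment we have already found."""
    rows, cols = len(image), len(image[0])
    segments = []
    for x, y in point_grid(n_per_side, cols, rows):
        seed = (int(y), int(x))  # convert (x, y) to (row, col)
        if any(seed in seg for seg in segments):
            continue  # already covered: drop this grid point
        segments.append(grow_from_seed(image, seed, threshold))
    return segments


# Toy 8 x 8 image: dark left half, bright right half, with a 2 x 2 point grid.
image = [[10] * 4 + [200] * 4 for _ in range(8)]
segments = segment_from_grid(image, n_per_side=2, threshold=30)
print(len(segments), [len(s) for s in segments])
```

With a 2 x 2 grid on this toy image, only two of the four points survive (one per half), and each grows into a 32-pixel segment.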

There’s also some smoothing that goes on so that the segment edges don’t look too pixelly. The result is a pretty clean image with borders around the different features. It looks a bit like a colouring-in poster.
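I don’t know exactly what smoothing Segment Anything applies, so as an assumed, generic example, here’s a 3 x 3 majority filter on a binary segment mask: each pixel takes whichever value (inside or outside the segment) most of its neighbourhood has. The function name is hypothetical.

```python
def majority_filter(mask):
    """Smooth a binary mask: each pixel becomes True only if more than half
    of the pixels in its 3 x 3 neighbourhood (clipped at the image border)
    are True."""
    rows, cols = len(mask), len(mask[0])
    out = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            votes = total = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols:
                        total += 1
                        votes += mask[nr][nc]  # True counts as 1
            out[r][c] = votes * 2 > total
    return out


speck = [[False] * 5 for _ in range(5)]
speck[2][2] = True   # a single stray "pixelly" speck outside any segment
hole = [[True] * 5 for _ in range(5)]
hole[2][2] = False   # a one-pixel hole inside a segment

smoothed_speck = majority_filter(speck)
smoothed_hole = majority_filter(hole)
```

The stray speck disappears and the one-pixel hole gets filled, which is the kind of tidy-up that stops segment edges looking ragged.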

Is this the same as a classification?

No, segmentation is just one step towards classifying an image. In the image sequence above, you can see that the segments are all different colours. These colours have absolutely nothing to do with the features on the ground. The software has no idea what each segment represents. Also, at this point it can’t tell whether one segment is the same ‘type’ as another. For example, there’s more than one house in the drone image, but it doesn’t know that those segments are all houses.
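To show the gap between the two steps, here’s a toy Python sketch. The segmentation only hands us arbitrary segment IDs; a separate classification step has to attach meaning. The class names, their reference brightness values and the nearest-value rule are all hypothetical simplifications (a real classifier would use colour, texture, shape and context, as described earlier).

```python
def segment_means(image, labels):
    """Average pixel value of each segment ID."""
    sums, counts = {}, {}
    for img_row, lab_row in zip(image, labels):
        for value, seg_id in zip(img_row, lab_row):
            sums[seg_id] = sums.get(seg_id, 0) + value
            counts[seg_id] = counts.get(seg_id, 0) + 1
    return {seg_id: sums[seg_id] / counts[seg_id] for seg_id in sums}


def classify_segments(means, class_refs):
    """Give each segment the class whose reference value is closest to the
    segment's mean value (a deliberately naive rule)."""
    return {seg_id: min(class_refs, key=lambda name: abs(class_refs[name] - mean))
            for seg_id, mean in means.items()}


image = [[12, 12, 195],
         [90, 90, 195],
         [90, 205, 205]]
labels = [[0, 0, 1],    # segment IDs: arbitrary numbers, no meaning yet
          [2, 2, 1],
          [2, 3, 3]]
class_refs = {"water": 15, "grass": 90, "house": 200}  # hypothetical

classes = classify_segments(segment_means(image, labels), class_refs)
print(classes)
```

Segments 1 and 3 are separate objects with different IDs, and only the classification step recognises both as ‘house’.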

I’ve seen many people on social media express amazement that Segment Anything works on so many different ‘types’ of images: satellite, aerial, underwater, nadir, oblique, indoors, outdoors, MRIs… However, the type of image is largely irrelevant, as long as there is contrast between the features within the image. Remember, it hasn’t been trained to recognise specific classes of feature. It isn’t identifying these features, just detecting that they exist.

What’s amazing is that this segmentation step used to be really challenging. Simplifying it means we can get further along the analytics process, faster. That means greater insights from our Earth observation data and, ultimately, better outcomes for monitoring and management.

It doesn’t mean you need to fear losing your job. Unless, of course, your job was manually segmenting images, in which case it’s time to upskill to the next stage 🙂 Have some fun with labelling!

Does spatial resolution matter in image segmentation?

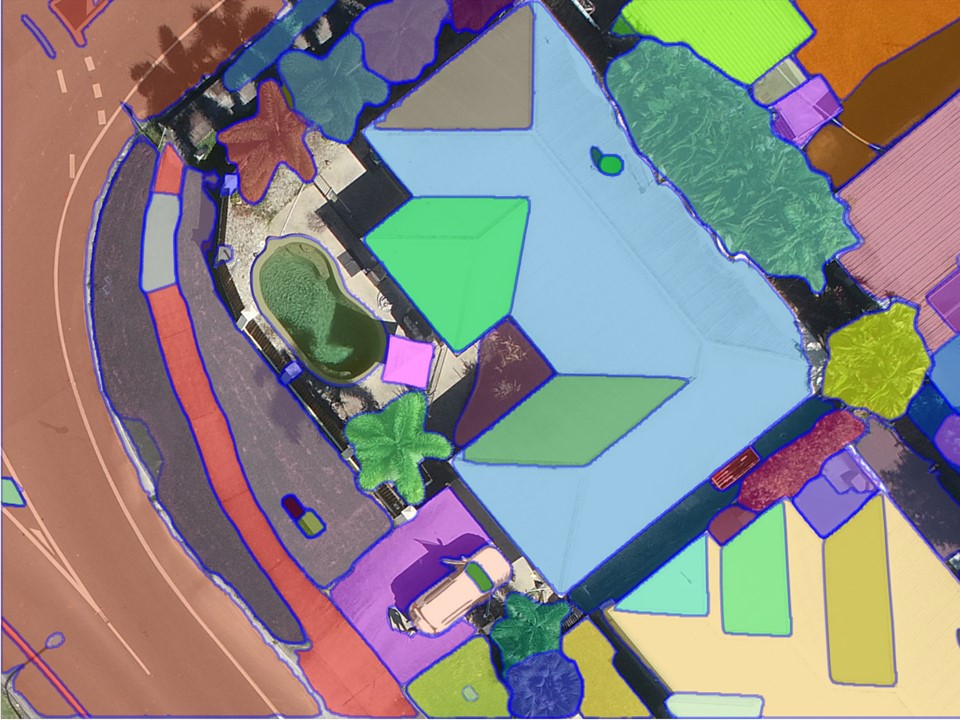

Yes, spatial resolution is an important consideration, as is the image size. Take, for example, the second image carousel below. It shows a subset taken from the top right of the previous sequence. Hopefully you can recognise the house and the pool.

Both sequences have the same 32 x 32 dot grid overlaid. But because the second sequence covers a smaller area, there are more dots per unit area of ground. So while the resolution of the image itself hasn’t changed, the resolution of the output segments has.

The second sequence now includes individual palm trees, a letterbox, a car and its windscreen.

I have not yet delved deep into the codebase to see how to change the segment scale – here I’ve used the demo tool.
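The ‘more dots per unit area’ effect is simple arithmetic. Here’s a small sketch assuming a hypothetical 4000 x 3000 pixel drone image at 3 cm ground sampling distance, and a hypothetical 800 x 600 pixel crop from it (one fifth of the width and height). For anyone who does dig into the open-source segment-anything code, the grid density for automatic mask generation is set by the `points_per_side` argument of `SamAutomaticMaskGenerator` (default 32), though I haven’t tested changing it on these images.

```python
def grid_spacing_m(width_px, height_px, gsd_m, n_per_side=32):
    """Ground distance (metres) between neighbouring prompt points in x and y,
    for an n_per_side x n_per_side grid stretched over the whole image."""
    return width_px * gsd_m / n_per_side, height_px * gsd_m / n_per_side


def points_per_m2(width_px, height_px, gsd_m, n_per_side=32):
    """Prompt-point density on the ground: total points / ground area."""
    area_m2 = (width_px * gsd_m) * (height_px * gsd_m)
    return n_per_side ** 2 / area_m2


GSD = 0.03            # hypothetical ground sampling distance: 3 cm per pixel
full = (4000, 3000)   # hypothetical full drone image, in pixels
subset = (800, 600)   # hypothetical crop: 1/5 of the width and of the height

full_spacing = grid_spacing_m(*full, GSD)      # roughly 3.75 m x 2.81 m
subset_spacing = grid_spacing_m(*subset, GSD)  # roughly 0.75 m x 0.56 m
density_ratio = points_per_m2(*subset, GSD) / points_per_m2(*full, GSD)
print(full_spacing, subset_spacing, density_ratio)
```

Shrinking the area by a factor of 5 in each direction packs the same 1,024 points about 25 times more densely onto the ground, which is why smaller features such as palm trees and cars start getting their own segments.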

How does this make life easier?

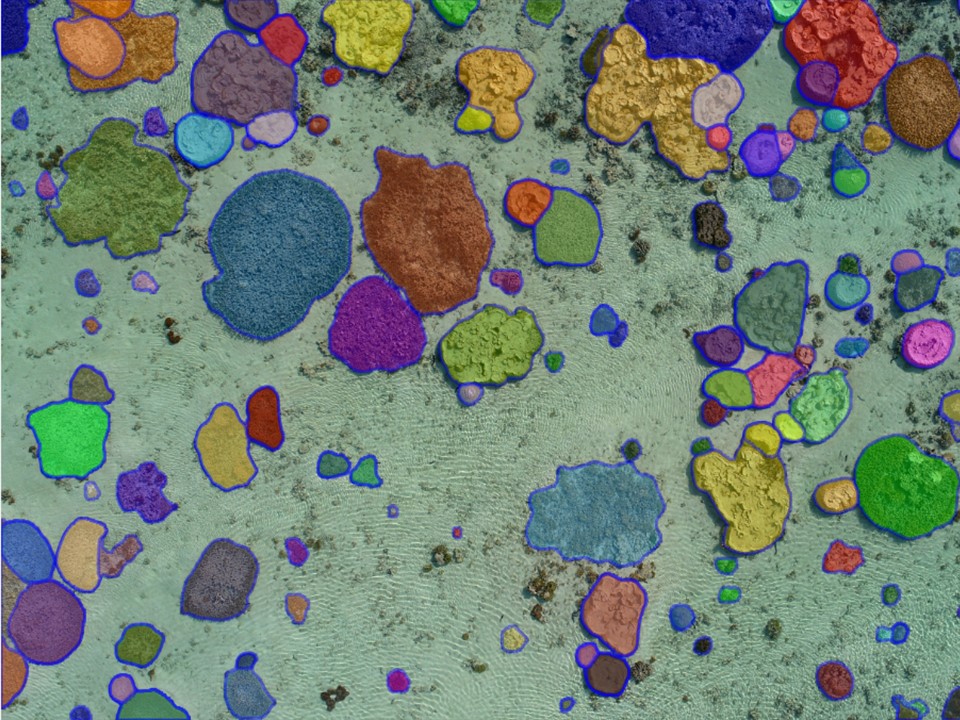

Training an AI model is time-consuming and repetitive. As an example, in 2021 our Earth observation data scientist Joan Li labelled thousands of sea cucumbers in drone imagery to train an algorithm to identify them (see the publication here). I quickly ran a subset of one of the images through Segment Anything, and our friends are picked up really nicely! Note that, although it may look like one, this is not a classification; we would still need to label the animals. But at least it has found them in the first place!

Image segments vs. original images
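Since the segments aren’t labelled, one way to speed up labelling is to shortlist segments of roughly the right size and review those first. Here’s a minimal sketch, assuming each segment is a dict with `area` (pixels) and `bbox` (x, y, width, height) keys, which mirrors the records returned by `SamAutomaticMaskGenerator.generate` in the segment-anything repository. The records and the area range below are made up for illustration, not real sea cucumber measurements.

```python
def labelling_queue(masks, min_area, max_area):
    """Shortlist segments whose pixel area is plausible for the target
    (e.g. a sea cucumber), largest first, returning their bounding boxes."""
    keep = [m for m in masks if min_area <= m["area"] <= max_area]
    keep.sort(key=lambda m: m["area"], reverse=True)
    return [m["bbox"] for m in keep]


# Hypothetical segment records (bbox = [x, y, width, height] in pixels).
masks = [
    {"area": 52000, "bbox": [0, 0, 400, 300]},     # large patch of sand
    {"area": 850, "bbox": [120, 40, 45, 22]},      # candidate animal
    {"area": 30, "bbox": [10, 10, 5, 6]},          # tiny speck of rubble
    {"area": 1200, "bbox": [300, 210, 60, 25]},    # candidate animal
]
queue = labelling_queue(masks, min_area=500, max_area=5000)
print(queue)
```

A human still has to confirm each shortlisted box, but they no longer have to find every animal by eye.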

When does it work to use Segment Anything for drone imagery?

Let’s return to the eye candy gif from the top of the blog. Using these aerial drone images, I’ll show you some examples of where it works well and where it struggles.

This is a picture-perfect example of Segment Anything doing a brilliant job with drone imagery. The corals contrast strongly with their surrounds and are therefore segmented well. It doesn’t detect very small features such as patches of rubble, algae, and sea cucumbers. But that’s just a matter of the scale of the imagery, and can be remedied by feeding in a smaller subset.
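Feeding in smaller subsets can be automated by cutting a large image into overlapping tiles and running each tile through the segmenter. Here’s a minimal sketch of the tiling arithmetic; the 1024-pixel tile size and 128-pixel overlap are hypothetical choices, and the function name is my own. (The segment-anything automatic mask generator also has a `crop_n_layers` option that runs on image crops, which may be worth trying before tiling by hand.)

```python
def tile_windows(width, height, tile, overlap):
    """Return (x0, y0, x1, y1) pixel windows (x1/y1 exclusive) covering the
    image, each at most `tile` pixels square, with neighbouring windows
    overlapping by at least `overlap` pixels."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    step = tile - overlap

    def starts(length):
        if length <= tile:
            return [0]
        positions = list(range(0, length - tile, step))
        positions.append(length - tile)  # last window sits flush with the edge
        return positions

    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in starts(height) for x in starts(width)]


windows = tile_windows(2000, 1500, tile=1024, overlap=128)
for w in windows:
    print(w)
```

The overlap means an object cut in half at one tile’s edge should appear whole in its neighbour, at the cost of some duplicate segments that need merging afterwards.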

Throw in some less-than-ideal conditions on the reef, and Segment Anything has greater difficulty. The ‘noise’ of the speckly surface water presents a challenge. Without clear contrast between features, it misses many segments that a human would detect. But it’s still actually better than I expected!

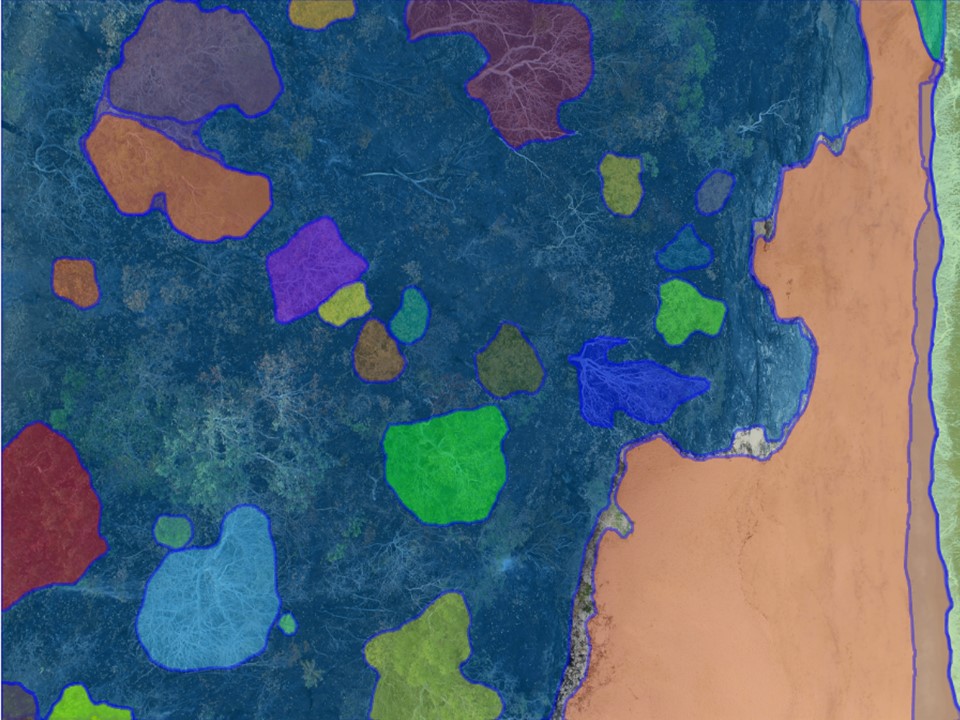

Segment Anything works well on this drone image of a mangrove ecosystem! It has clearly detected the difference between the dead mangrove patches and their surrounds. This is amazing for environmental monitoring!

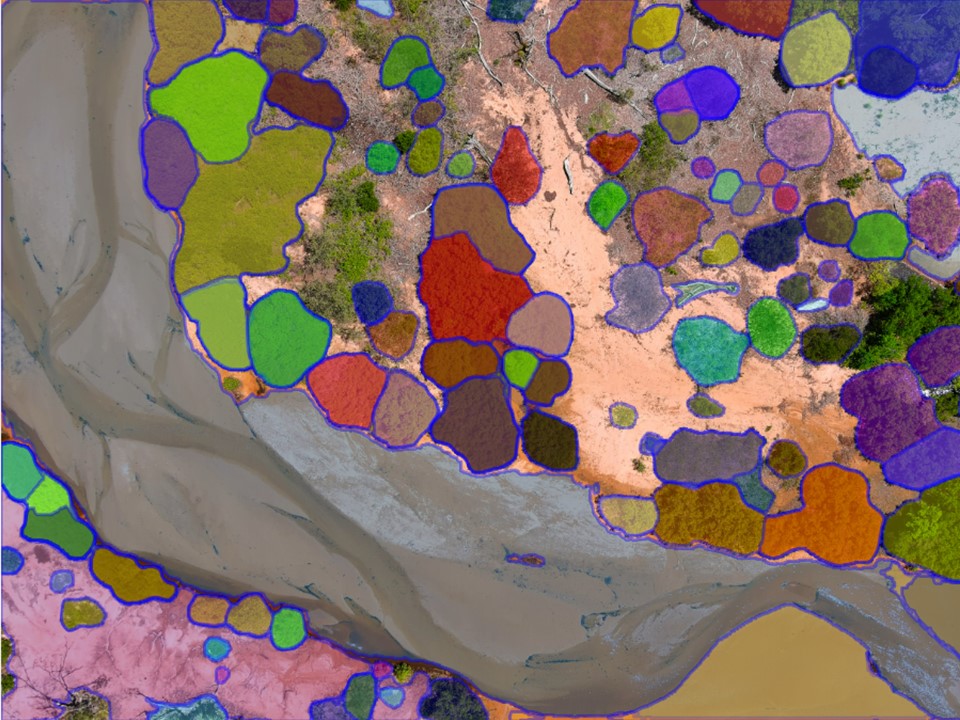

The tool easily segments the water and beach from the land. But it has some difficulty with the lack of contrast between the burnt ground and the trees on the land. If we wanted to use Segment Anything for drone imagery that looks like this, we’d need to do some tweaking.

The algorithm works beautifully to detect the edges between patches of trees and the sand dunes. The creek bed is a single segment, which could be seen positively or negatively. If you’re interested in the finer features of the creek, then this segmentation is too coarse. But for general segments, it has performed really well!

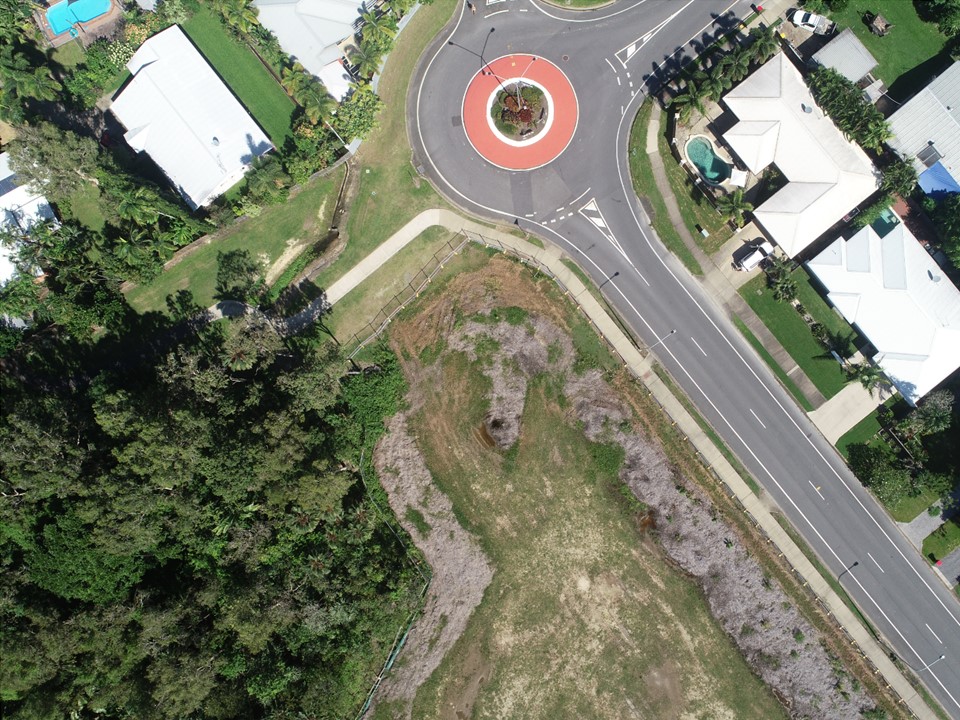

The high contrast in urban environments is perfect for Segment Anything. We can clearly see individual houses, roads and swimming pools. At this scale it doesn’t detect cars or other smaller objects.

Final thoughts…

If you haven’t yet had a play with Segment Anything, I highly recommend it! You can even download drone imagery from GeoNadir to test it out. But don’t expect it to be perfect. You might have noticed from the very first animation in this blog that some of the example images have better results than others. And the work doesn’t stop here… Now the labelling fun begins! I’m definitely looking forward to playing more with Segment Anything for drone imagery.

If you enjoyed this blog, you might also like to check out how to use GeoNadir to share insights from drone mapping data.